ETL #73–NULL or NOT NULL and SQL Interview Questions

Today is the day after Thanksgiving. There are many things to be thankful so I decided to write a short post today.

The first thing to be thankful is that Tomislav and I have completed the third edition of our MDX book, MDX with Microsoft SQL Server 2016 Analysis Services Cookbook. The book is published by Packt Publishing and has been uploaded to all the major publishing channels.

Recommend SQL interview questions on deskbright.com

The second thing to be thankful is the enthusiastic audience who have been faithfully reading my posts. I recently received two inquires, which are somehow related. One reader was a bit confused by what I said about the SQL NULL values and what I said about being careful of what we put in the WHERE clause. Another reader is from Deskbright.com, a new online learning platform. Deskbright.com offers a number of free online resources to help people learn business skills — such as SQL. They are wondering if I’d be willing to post a link to their page on SQL interview questions (https://www.deskbright.com/sql/sql-interview-questions/) on my site.

As I am browsing through the SQL interview questions on deskbright.com, I see the #3 question, “Why is this query not returning the expected results?”, and thought this is perfect for the question from the reader I mentioned previously. Instead of overwhelming readers, deskbright.com listed only 7 most common SQL interview questions.

I’d rather not to repeat what they have and would recommend their SQL interview questions to those of you who are still new to SQL or Business Intelligence.

MDX Cook book third edition

The full title of the book is MDX with Microsoft SQL Server 2016 Analysis Services Cookbook. By the time you see this post, the book should be on Amazon, PacktPub.com, and all other online technology book stores and the e-subscription sites.

See also

ETL #72–Your data can mysteriously disappear after a WHERE clause was added (2)

ETL #71–Your data can mysteriously disappear after a WHERE clause was added (1)

MDX – Putting Visual Totals on top or at the bottom

Dynamically totaling child members

Putting the dynamic totals on top or at the bottom can be a trivial or a big decision. Very often, visual tools reply on MDX queries to get the aggregation correct in the MDX query so that the need to aggregate in the visual tool is eliminated.

Suppose you need to display the sales for just Accessories and Clothing, and on the top you just want to show the aggregated sales value for Accessories and Clothing, as shown in the following example.

| Internet Sales Amount | |

| Total – All Products | $1,040,532.57 |

| Accessories | $700,759.96 |

| Clothing | $339,772.61 |

VisualTotals() function can be used to get visual totals

VisualTotals() function is a good choice to display the aggregated values for the result set (“visual” here implies that the aggregation will be only based on the visually seen results, not based on the entire cube space). The VisualTotals () function will allow you to show the totals on top or at the bottom, depending on where you put the All member.

This query using the VisualTotals () function will display the visual total on top. This is because the All Product member was the first in the named set.

WITH SET [Visual Total] AS

VisualTotals(

{ [Product].[Category].[All Products],

[Product].[Category].[Accessories],

[Product].[Category].[Clothing] }

,

'Total - *'

)

SELECT

{ [Measures].[Internet Sales Amount] } ON COLUMNS,

[Visual Total] ON ROWS

FROM

[MDXBook]

Let’s put the All member as the last member in the named set as the following:

WITH SET [Visual Total] AS

VisualTotals(

{ [Product].[Category].[Accessories],

[Product].[Category].[Clothing],

[Product].[Category].[All Products] }

,

'Total - *'

)

Now the visual total is shown at the bottom.

| Internet Sales Amount | |

| Accessories | $700,759.96 |

| Clothing | $339,772.61 |

| All Products | $1,040,532.57 |

Now the visual total is shown at the bottom.

As a matter of fact, you can display the totals anywhere. Try putting the All member in the middle, you will get the totals in the middle of the result set.

WITH SET [Visual Total] AS

VisualTotals(

{ [Product].[Category].[Clothing],

[Product].[Category].[All Products],

[Product].[Category].[Accessories] }

,

'Total - *'

)

Hierarchize doesn’t seem to be necessary if there is only one level

I’ve seen MDX queries that used the Hierarchize() function for the only purpose to put the visual totals at the bottom, using the POST options.

Hierarchize ( [Visual Total], POST ) ON ROWS

When using the POST option, the Hierarchize function sorts the members in a level using a post-natural order. In other words, child members precede their parents. Therefore the dynamic totals are at the bottom. If there is only one level in the result set, I don’t see the need to use the Hierarchize function since we can position the dynamic totals at the bottom by simply rearranging the set.

Check out this MSDN page to see the description of the VisualTotals() function:

Enormous response from the last promotional campaign

After I posted this blog MDX Cookbook is a popular book, I received another email from my MDX book publisher.

Hi Sherry,

Hope all is well with you.

Considering the enormous response we received for the last promotional campaign that ended on 12th March, would you be willing to continue the offer (50% OFF on the eBook of MDX with SSAS 2012 Cookbook) until mid-April? If yes, let me know the size specifications and I will send you the banner.

Awaiting your reply,

Regards,

Your publisher

I am very happy to give my readers another banner for 50% discount on the MDX with SSAS 2012 Cookbook until mid-April.

When a calculated measure is added NON EMPTY stopped working

We use NON EMPTY key word on the rows axis to remove rows with NULL values. It works only when all the measures are NULL. If any one of the measures has value, NON EMPTY key word will not be able to remove rows.

NON EMPTY on rows axis worked as expected

A co-worker recently worked on getting 30+ measures from multiple measure groups out from our production cube. The NON EMPTY key word on the rows axis worked as expected.

SELECT

{ [Measures].[measure 1],

....

{[Measures].[measure 30] } ON COLUMNS,

NON EMPTY

[Employee].[Employee Name].[Employee Name].MEMBERS

ON ROWS

FROM

[REPORTING_CUBE]

WHERE

( [Date].[Date View].[Month].&[201601])

The month on the WHERE clause is hardcoded in this blog, but this query is supposed to be executed in an OPENQUERTY with a dynamic month value. In order to attach a period key value to the month, my co-worker decided to also get the month period key in the same query. (Let’s put aside why the OPENQUERTY is used and whether the month period key is needed or not and let’s focus on the NON EMPTY.)

NON EMPTY stopped working when a calculated Period Key is added

In order to get the hidden month period key, he used the WITH MEMBER to create a query-scope measure:

WITH MEMBER [Measures].[Period Key] AS LEFT([Date].[Date View].CurrentMember.Member_Key, 6)

This [Measures].[Period Key] is then added to the columns axis.

SELECT

{ [Measures].[Period Key],

[Measures].[measure 1],

....

{[Measures].[measure 30] } ON COLUMNS,

The query at the point returns rows that more than doubled what it returned before with many of them showing all measures NULL, except the Period Key.

The NON EMPTY is obviously not working at this point. When you think about it, this actually makes sense. In the context of the query, the Period Key has value and should be the same for all the employees whether those measures are NULL or not. This explains why NON EMPTY cannot remove the rows even when all the measures are NULL, since [Measures].[Period Key] has value.

Below is a made-up example. It shows that we get rows back even when all measures are NULL.

| Period Key | Measure 1 | Measure 2 | Measure 3 | |

| Austin, D | 201601 | (null) | (null) | (null) |

| Avalos, K | 201601 | (null) | (null) | (null) |

| Baker, B | 201601 | (null) | 1,158.78 | (null) |

| Brooks, W | 201601 | 85.55% | 291.38 | 1 |

| Brown, L | 201601 | (null) | (null) | (null) |

| Cruz, P | 201601 | (null) | (null) | (null) |

| Cruz, S | 201601 | (null) | (null) | (null) |

NONEMPTY() function is a lot more flexible

The NONEMPTY() function is a lot more flexible and should work in this case. But the usual form of it, where one measure is given as the second parameter, would not work in this case.

NONEMPTY( [Employee].[Employee Name].[Employee Name].MEMBERS, [Measures].[measure 1] )

Use a named set as the second parameter for the NONEMPTY() function

We need to check for all the 30+ measures. The solution here is to use a named set as the second parameter.

NONEMPTY( [Employee].[Employee Name].[Employee Name].MEMBERS, [The Set] )

We will define the named set as the following to combine all 30+ measure together into a set:

WITH

SET [The Set] AS

{ [Measures].[measure 1],

...

[Measures].[measure 30] }

Putting all together, here is the final query that will only return the employees who have values in those 30+ measures.

WITH

SET [The Set] AS

{ [Measures].[measure 1],

...

[Measures].[measure 30] }

SELECT

{ [Measures].[Period Key],

[The Set] } ON COLUMNS,

NONEMPTY(

[Employee].[Employee Name].[Employee Name].MEMBERS,

[The Set]

) ON ROWS

FROM

[REPORTING_CUBE]

WHERE

( [Date].[Date View].[Month].&[201601])

In my made-up example, only the two rows that are in red and bold will be returned, Baker, B and Brooks, W.

Always provide set_expression2 in NonEmpty() function

On MSDN the NonEmpty() Function page shows the syntax as this:

NONEMPTY(set_expression1 [,set_expression2])

It implies that the second parameter is optional. Check out my old blog to understand why we should always provide set_expression2 in the NonEmpty() function.

Consider removing the NON EMPTY key word from the COLUMNS axis

You might have seen error message like this from a SQL job that pulls data from an OLAP cube using OPENQUERY:

The OLE DB provider “MSOLAP” for linked server “CUBE_LINK” indicates that either the object has no columns or the current user does not have permissions on that object.

When using linked server or writing MDX queries in SSRS reports to pull data from OLAP cube, consider removing the NON EMPTY key word on COLUMNS.

When there is no data in the result set, the NON EMPTY key word on COLUMNS will not return any columns. The linked server will send “object has no columns” error and the OPEMQUERY will fail.

Unless you truly want the OPEMQUERY to fail, consider removing the NON EMPTY key word from the COLUMNS axis.

Removing the NON EMPTY key word from the COLUMNS axis will allow the MDX to return the columns even when the query contains no data.

SELECT --NON EMPTY

{[Measures].[The Minutes]} ON COLUMNS

,NON EMPTY {

[SomeDimension].[EMPID].CHILDREN *

[Date].[Dates].[Month].&[201603] } ON ROWS

FROM TheCube

WHERE …

There are many tips in the book MDX with SSAS2012 Cookbook about how the NON EMPTY key word behaves. Since the book is organized not by MDX functions, but rather by subject areas, the best way to find these tips is to use the Index at the back of the book to find examples and tips in the book.

MDX Cookbook is a popular book

I cannot believe that I have not posted any blog since May 2015! It’s been a busy few months for my co-workers and I, working on an OLAP re-design project. From the re-design of the ETL processes, to the multi-dimensional model, to building the dimensions, measure groups and cube, to the hundreds of pages of MDX calculation scripts, I’ve learned so much in the last few months. Not mentioning converting all the SSRS reports in MDX queries, which is the part I enjoyed a lot.

MDX is not the only skill that BI/data warehouse developers need, but it’s without doubt, many find that it is a very difficult skill to master. On the other hand, MDX is a pretty easy skill to pick up. Tomislav Piasevoli is a master in MDX. His insight in MDX will bring you from a beginner to an experience MDX writer. It is such a privilege for me to bring the original one in SSAS 2008 to SSAS 2012.

If your work touches OLAP, you need to know something about MDX. One BI/data warehouse developer once said to me that MDX books are so few that he bought every (almost) MDX book on the market. Among the few MDX books available, MDX with SSAS 2012 Cookbook is a popular book. While working on our OLAP re-design project, writing MDX calculation scripts, and converting SSRS reports in MDX queries, my co-workers and I often consulted the MDX Cookbook.

I encourage you to take advantage this one time discount. The 50% discount (with code MDXAS50) can only apply to the eBook version, and is valid through March 12, 2016. I know many of us still prefer the paper book. But the discount is hard to beat.

The MDX with SSAS 2012 Cookbook link is as follows: http://bit.ly/20qjH0a

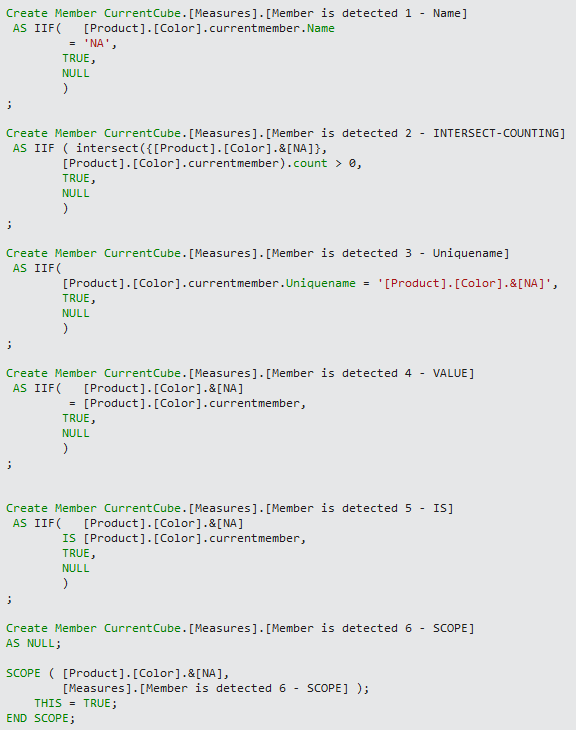

MDX #46–6 different ways to detect a particular member in MDX script

There are many great tips in MDX with SSAS 2012 Cookbook

The book MDX with SSAS 2012 Cookbook has many great tips for MDX script writers.

Here are two that are from Chapter 5 Navigation:

- detecting a particular member by comparing object with keyword IS is better than comparing name

- using SCOPE() statement in MDX script is a more “permanent” solution than calculations in MDX query

MDX script writers frequently need to include or exclude a particular member in a calculation. The first step is to determine the member exists in a hierarchy.

The book provided great recipes on how this can be done in MDX queries. In this blog, I’ll focus on how this can be done in MDX scripts.

If you have questions about the following concepts, please refer to Chapter 5 Navigation:

- Iteration on query axes

- Currentmember function

- IS keyword

- SCOPE() statement

- Member’s unique name

- Why we should avoid comparing names

- Why using SCOPE() statement is a better way in MDX script

6 different ways it can be done in MDX script

Suppose that we need to detect the NA member in the Color hierarchy of the Product dimension. The result should show us TRUE for color NA only.

| Color | Member is detected |

| Black | |

| Blue | |

| Grey | |

| Multi | |

| NA | TRUE |

| Red | |

| Silver | |

| Silver/Black | |

| White | |

| Yellow | |

| Assembly Components |

Here are 6 different ways it can be done in MDX script (there are more ways if you insist; see the screen shot below).

- Member is detected 1 – Name: compare the Name property of the current member

- Member is detected 2 – INTERSECT-COUNTING: intersect with the current member and then use Count()

- Member is detected 3 – Uniquename: compare the Uniquename property of the current member

- Member is detected 4 – VALUE: compare the full value of the current member

- Member is detected 5 – IS: use IS to compare member object

- Member is detected 6 – SCOPE: use SCOPE() statement

The solutions are increasingly better from 1 to 6, with number 1 being the worst, and number 6 much better. Avoid number 1 and 2; Number 3 is similar to number 4 and 5; Number 6 is a better choice in MDX script.

Become familiar with the SCOPE statement

Check out this link:

http://sqlbits.com/Sessions/Event8/Fun_with_Scoped_Assignments_in_MDX

Chris Webb did a wonderful job in this “Fun with Scoped Assignments in MDX” video.

ETL #72–Your data can mysteriously disappear after a WHERE clause was added (2)

Why a seemingly innocent WHERE clause can cause large amount of data missing

In the previous post, ETL #71–Your data can mysteriously disappear after a WHERE clause was added (1), I talked about how I discovered a WHERE clause was added to a main procedure that processes the raw data prior to loading the data into the fact table.

ecda1.[CUSTOM_DATA_8] <> ‘null’

A quick check of the raw data shows that out of 1.3+ million rows, there is only one row that has string value of ‘null’ in ecda1.[CUSTOM_DATA_8], and also that about 20% of the rows has SQL NULL value in the field CUSTOM_DATA_8. It’s these 20% of the data that was also filtered out along with the one ‘bad’ row.

The missing 20% of the data has SQL NULL in the field

The field [CUSTOM_DATA_8] on table alias ecda1 has one row that has a string value of ‘null’. But about 20% of rows has a value of SQL NULL.

We all know a thing or two about the SQL NULL:

- SQL NULL is the term used to represent a missing value.

- A NULL value in a table is a value in a field that appears to be blank.

- It is very important to understand that a NULL value is different than a zero value or a field that contains spaces.

IS NOT NULL is implied whenever we add a filter

But the most important thing about the SQL NULL is this: IS NOT NULL is implied in the WHERE clause whenever we add a filter of either equality or inequality.

So if we add a filter as:

ecda1.[CUSTOM_DATA_8] <> ‘aStringValue’

Then, we really mean this:

ecda1.[CUSTOM_DATA_8] <> ‘aStringValue’

AND

ecda1.[CUSTOM_DATA_8] IS NOT NULL

If we add a filter as:

ecda1.[CUSTOM_DATA_8] = ‘aStringValue’

Then, we really mean this:

ecda1.[CUSTOM_DATA_8] = ‘aStringValue’

AND

ecda1.[CUSTOM_DATA_8] IS NOT NULL

Now you can understand why those 20% of rows with SQL NULL values are missing.

Adding a WHERE clause during data processing in the production environment of ETL is almost never a good idea

You probably will also understand why it is not a good idea to add WHERE clauses to the data processing stage in ETL.

In production, WHERE clauses should not be added unless it’s a documented business requirement.

In data discovery stage, WHERE clauses are used only for data discovery purpose and should not be carried over into production.

Handling of “bad” or missing dimensional data

If these “bad” data are part of the data you will use to slice and dice your data, then there are many ways you can handle them. How to handle these “bad” or missing dimensional data is beyond the scope of this short blog.

ETL #71–Your data can mysteriously disappear after a WHERE clause was added (1)

Validations at end of ETL indicate missing data

At the end of each ETL job, I always run some sort of validation process to give me an indication that the data loading and processing are as expected or things have gone terribly wrong. The latter happened last Thursday when I received the validation result in my inbox.

Last Thursday also coincided with a “disk utilization” issue in the upstream server. So I waited until last Friday when the “disk utilization” issue was fixed in the upstream server to have the data reloaded. To my surprise, the data volume that was loaded on our side was still way below normal.

Steps to investigate missing data

It’s time to investigate. Blog writers at this point will usually “make the long story short”, I’ll do the same here, but with a few bullet points to highlight the steps I took to investigate.

- Pick one day for one employee: this is the most obvious data point to validate for my data. Our data contains detail data down to the granularity of per employee, per Interaction Resource ID, per connection ID, per 15 minutes interval per row. Picking one day for one employee will give me not too little and not too much data to check.

- Validate on the upstream source server: the table in question is a fact table and has a unique interaction resource fact ID. The unique list of the interaction resource fact ID is an obvious candidate as my data points for checking.

- Compare the interaction resource fact ID: between the upstream source server and our own server. Because the data volume is small enough, a simple query revealed that about 20% of the interaction resource fact IDs are not loaded into our own table.

- An ETL process design with an atomic operation in mind helps to pinpoint exactly where the problem might be: our fact tables usually are long and also wide, so it’s not very easy to visually see why these 20% of the data were not loaded. So it’s time to go to the step where the data was processed before the loading. Fortunately my ETL processes are designed with the atomic operation in mind, and I know exactly which step to look for the culprit.

- A WHERE clause was added to the processing stored procedure: a log entry in the procedure clearly says that a WHERE clause was added last Thursday. A quick running of the query inside the procedure shows that this WHERE clause filter out those 20% data.

ecda1.[CUSTOM_DATA_8] <> ‘null’

A quick check of the raw data shows that out of 1.3+ million rows, there is only one row that has the value ‘null’ in ecda1.[CUSTOM_DATA_8]. Then why 20% of the data were also filtered out along with the one ‘bad’ row?

Why a seemingly innocent WHERE clause can cause large amount of data missing

This post is getting longer than I wanted. I’ll stop now. In the next post, I’ll explain:

- Why the above WHERE clause not only filtered out one ‘bad’ row, but also took 20% of other rows along with it.

- Why in ETL, adding WHERE clause during data processing in production is almost never a good idea.

Validation of data loading and processing

One of the most often used validation method at the end of each ETL run is to run a cross reference checking on a couple of metrics, which entails finding two independent sources of the same metric.

Atomic Operation in ETL

Atomic operation, atomic programming, atomic database design, atomic transaction, etc., etc.. There are many explanations to these concepts. I am probably not qualified to give it a formal definition in the ETL design, but it’s a strategy that every ETL designer/developer should learn to practice. As an ETL designer/developer, our professional life depends on how well we understand the strategy and how well we apply it to every single task we design.

SQL #58–Where are my own templates in SQL Server Management Studio 2012

The secrete SQLFile.sql

We are all so used to clicking on the New Query icon (or the keyboard shortcut Ctrl+N). We know it will open a new query window in SSMS, an empty query window that is, but many of us never knew that something else was designed to happen before the new query window is opened.

I didn’t know that either until a co-worker told us a trick to open a new query window pre-filled with some code snippet. To the credit of my co-worker, here it goes about the secrete SQLFile.sql file.

“….earlier today about the upcoming OLAP environment and the need for standardization, and mentioned that I have a template that SSMS automatically launches every time that I open a new tab. The template includes a USE database statement, along with some verbiage for getting a CREATE PROCEDURE or VIEW statement started, and then a comment block, including Change History log, that would be part of the procedure or view.

I have found that it makes it much harder for me to leave out the comments, because I no longer have to open an old stored procedure to find a comment block with which to start.

This script is just something I threw together; please feel free to modify it to suit your needs (like, replacing my name w/yours).

Having said that, I hope we can come up with a standard template for all of us to use, with minimal differences from one developer to another, so that it will help us document our code as consistently as possible.”

To make this template available in SSMS 2008 (or R2), put SQLFile.sql in this folder:

C:\Program Files (x86)\Microsoft SQL Server\100\Tools\Binn\VSShell\Common7\IDE\SqlWorkbenchProjectItems\Sql

In SSMS 2012, put SQLFile.sql in this folder:

C:\Program Files (x86)\Microsoft SQL Server\110\Tools\Binn\ManagementStudio\SqlWorkbenchProjectItems\Sql

Once you put your code snippet in the secrete SQLFile.sql file, your new query window will no longer be empty. It will be pre-filled with your template.

Built-in script templates

SQL Server Management Studio by far is the best tool I’ve ever used. It’s features, such as the Object Explorer, the IntelliSense, the Registered Servers Explorer, and of cause the Template Explorer, are all powerful features that can significantly increase your productivity in SQL development.

In every version I used, SQL Server Management Studio always comes with a bunch of SQL (and MDX) script templates, including templates to create tables, views, stored procedures, triggers, statistics, and functions etc. All you need to do is to open the Template Explorer, by going to View menu > Template Explorer or jus simple using keyboard shortcut CTRL+ALT+T. You’ll see all the built-in templates, .

Create our own custom templates

What I really like about the template feature is that it allows us to create our own custom templates. Although the template feature allows you to auto-create code by filling out the template parameters, I know most SQL developers only use it to store their own code snippets, or team code templates, without bothering with the parameters.

But using the template feature is not without frustration. What frustrates me the most is to figure out where my own custom templates went.

Another mystery – where are my own code templates?

I am running Windows 7, here is the path where Microsoft put all the custom template files.

C:\Users\DefaultUser\AppData\Roaming\Microsoft\SQL Server Management Studio\11.0\Templates\Sql

On Windows 7, the AppData folder is a hidden folder. This certainly added another layer of secrecy to the whole template feature.

On Windows 7, to get the hidden folders to show, enter this command in the command window. This is much faster than opening the Control Panel and looking for the right program wrapped in several folders.

Folder Options

Then choose Show hidden files, folders, and drives in the View tab, as shown in the following screenshot.

Easy to use after all

After all the mysteries are solved, the template feature in SSMS is extremely easy to use and very user friendly.

- Create a folder: this option allows you to create your own folders under the root folder mentioned previously. Create as many folders or sub-folders to better organize your scripts.

- Create Template: this option allows you create your template with your own code.

- Open Template: this option allows you use the pre-filled code in your template.

- Edit Template: this option allows you modify the code in your template.